One of the key emerging threats we expect organisations to face in 2021 is disinformation. Popularly known as “fake news”, disinformation is the deliberate online delivery of false or distorted information to influence a target group or individual.

In the year ahead, more organisations will be targeted by “disinformation-for-hire” threat actors seeking to damage their reputations, operations and revenue. These actors will make growing use of artificial intelligence (AI) and related techniques to appear legitimate under both technical and manual review. Organisations will find this threat landscape increasingly hard to navigate as technology, regulation and geopolitics profoundly shape the conduct and impact of disinformation.

From states, to societies, to organisations

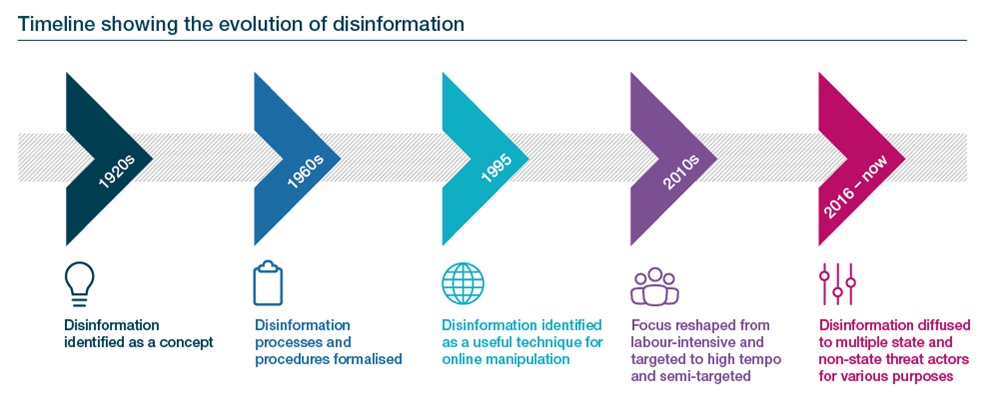

Some disinformation is directed or encouraged by foreign state actors, but a large proportion is conducted by non-state and domestic actors. Since 2016, disinformation has grown into a threat to political systems and wider societies; in 2021, it will increasingly threaten organisations too.

Disinformation increasingly relies on AI…

As social media platforms have become better at identifying disinformation, threat actors have gone to greater lengths to appear legitimate. Several networks identified in 2020 used genuine content to establish credibility before posting disinformation. One notable example is Peace Data, a seemingly legitimate website that employed unwitting journalists as a front for the Russian state-linked Internet Research Agency (IRA).

A second notable change in 2020 was the growing effectiveness of artificial narrow intelligence (ANI), that is, AI designed to perform a specific task. The first takedown of a disinformation network using ANI-generated content took place in December 2019, and it was quickly followed by several others in both domestic and foreign operations.

Both developments are likely to affect organisations, because they make it far easier to target organisations with disinformation at scale. Potential future examples include co-ordinating seemingly legitimate “mass movements” to affect stock prices, or creating digital ecosystems to attack an organisation’s reputation.

… and is increasingly outsourced to third parties

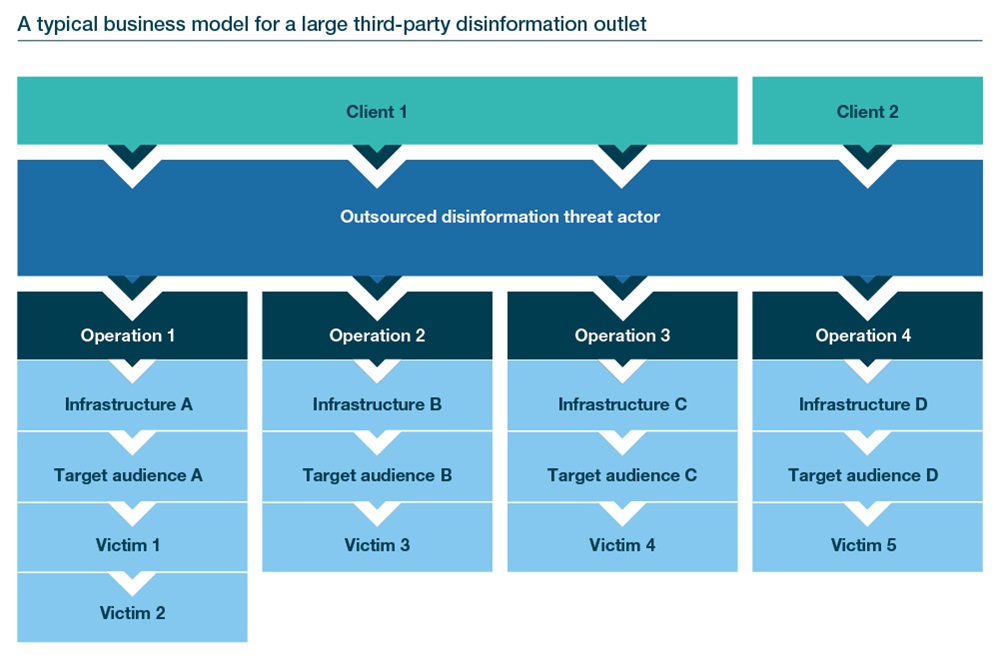

Over the past two years, we have identified a growing number of third parties conducting disinformation campaigns on behalf of clients (see below); such services also give the ultimate sponsor plausible deniability. Perhaps the most infamous is the IRA, widely accused of attempting to interfere in the 2016 US presidential election.

Most of these third parties lack the IRA’s resources but can be far more targeted. They range from unsophisticated troll farms to international public relations firms. Research has identified such entities offering services in 48 countries in 2020, double the number in the previous year.

Technology will continue to both enable and counter disinformation

In the coming year, technology will be a key driver of disinformation. Deepfakes, video or audio content produced with AI-based technology to appear convincingly lifelike, already offer a clear example of how emerging technologies can have real-world impact.

In 2021 we expect to see more threat actors using AI-generated voices to impersonate individuals, as well as attempting to gain access to voice biometric databases. In September 2019, for example, we assessed a campaign in which cybercriminals defrauded an energy company of USD 243,000 by using AI software to mimic the voice of the CEO of its parent company.

To counter disinformation, technologies such as digital watermarking (tagging visual content) are likely to become more widely used. Co-operation is also likely to increase between news outlets, social media platforms and tech companies.

Regulation will shape the operating environment and drive moves to alternative platforms

The second key driver in 2021 will be the increased regulation of social media. Data protection laws, such as the EU’s General Data Protection Regulation (GDPR), can limit how social media platforms collect and use personal information; by restricting access to the data that enables targeted advertising, such laws can make it harder to target individuals with disinformation.

The EU’s Code of Practice on Disinformation will also increase transparency into how online platforms combat disinformation. Actions taken by social media companies in 2021 are already shaping further action by governments. As these measures increase the pressure on threat actors, we anticipate that more disinformation will migrate to less scrutinised alternative platforms.

Disinformation-as-a-service increasingly tied to geopolitics; commercial entities increasingly likely to be targeted

As the disinformation-as-a-service market grows, more non-state and private commercial organisations will hire these threat actors. One tactic likely to see increased use is the abuse of social media adverts to target the customers of commercial products and services (see below for the typical business model of a third-party disinformation outlet). Although state and commercial clients may pursue different goals, in practice their infrastructure, target audiences and ultimate victims will very often overlap.

A growing number of clients for outsourced third parties will continue to make some disinformation easier to detect, despite advances in techniques and technology, since campaigns run for different clients often share the same infrastructure. Related to this, competition between disinformation networks targeting the same audiences is highly likely to increase in 2021. For example, in December 2020 Facebook said it had identified state-linked threat actors connected to France and Russia targeting the same audiences while simultaneously attempting to expose each other.

How can organisations protect themselves?

Organisations should:

- Engage stakeholders across internal departments so that employees can counter disinformation quickly and effectively.

- Collaborate with industry peers that are likely to be targeted by similar campaigns.

- Formulate an incident response plan for communicating a counter-narrative to employees, external stakeholders, and clients.

- Remain aware that, despite the above, not all negative activity online will be the result of disinformation.

- Continue to follow coverage of disinformation and emerging technology-related threats on Seerist.